Workflow Fields Configuration Overview

Introduction

This page explains the purpose of each field when configuring a workflow and offers tips on configuring them properly.

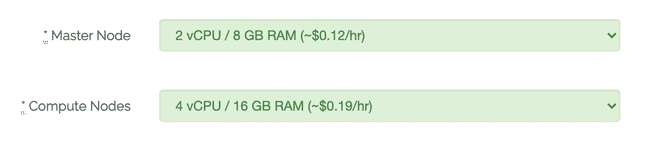

High Performance Computing (HPC) Resources

When you launch a Metworx Workflow, you select a combination of cloud resources consisting of a head node and compute nodes. Together these are referred to as an HPC cluster, which remains running until you delete the workflow. Base your selection on the needs of your scientific or statistical analysis, since the right choice varies. Several head node sizes and compute resource options are available. See Working in Metworx for more details.

The Head Node (also called the Master Node) runs all of the software you interact with, such as RStudio and the Desktop. The head node is always on while your workflow is Active.

When configuring the head node's vCPUs and RAM, 2 vCPUs are computationally sufficient in most cases. For more detail on rightsizing workflows, see https://kb.metworx.com/Users/Getting_Started/rightsizing-workflows/

The Compute Nodes do the heavy lifting of processing your jobs and simulations.

NOTE: The compute node configuration you define in your workflow applies to each compute node individually.

For example, with the configuration shown in the screenshot above, each compute node in your workflow will have 4 vCPUs and 16 GB of RAM.

Likewise, the cost shown (~$0.19/hr) is the cost per node, not for the whole cluster.
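Because both the node specification and the displayed price apply per node, cluster-wide totals scale with the number of running compute nodes. The minimal Python sketch below illustrates that multiplication using the 4 vCPU / 16 GB / ~$0.19/hr figures from the screenshot. The `NodeSpec` type and `cluster_totals` function are hypothetical names for illustration, not part of any Metworx API, and the sketch leaves out the head node's own resources and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node compute configuration (hypothetical helper, not a Metworx API)."""
    vcpus: int
    ram_gb: int
    cost_per_hour: float  # the displayed price, which applies to ONE node


def cluster_totals(spec: NodeSpec, n_nodes: int) -> dict:
    """Scale a per-node spec up to n_nodes running compute nodes.

    Head node resources and cost are deliberately excluded.
    """
    if n_nodes < 0:
        raise ValueError("n_nodes must be non-negative")
    return {
        "vcpus": spec.vcpus * n_nodes,
        "ram_gb": spec.ram_gb * n_nodes,
        "cost_per_hour": round(spec.cost_per_hour * n_nodes, 2),
    }


# Compute node configuration from the screenshot: 4 vCPU / 16 GB at ~$0.19/hr.
node = NodeSpec(vcpus=4, ram_gb=16, cost_per_hour=0.19)
print(cluster_totals(node, 10))
```

With 10 compute nodes running, this gives 40 vCPUs, 160 GB of RAM and roughly $1.90/hr for the compute nodes.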

Whether you use 1,000 cores for 1 hour or 10 cores for 100 hours, the total compute time is the same (1,000 core-hours), so the biggest factor in cost is how long your model takes to run. The cost covers the runtime plus 20 minutes of idle time before the compute nodes shut down.
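As a rough sketch of the arithmetic, the compute cost of a run can be estimated as nodes × hourly price per node × (runtime + 20 minutes). This assumes every compute node is up for the full job runtime and then stays up for the 20-minute idle window before shutting down. That is a simplification, since in practice nodes may start and stop at different times. The function name `estimate_compute_cost` is hypothetical, and the estimate excludes the head node.

```python
IDLE_MINUTES = 20  # idle time before compute nodes shut down, per the text above


def estimate_compute_cost(n_nodes: int, cost_per_node_hour: float,
                          runtime_hours: float) -> float:
    """Rough compute-node cost for one run (simplified model).

    Assumes all nodes are up for the whole runtime plus the idle window.
    Head node cost is not included.
    """
    billed_hours = runtime_hours + IDLE_MINUTES / 60
    return n_nodes * cost_per_node_hour * billed_hours


# The same 100 node-hours of work, split two ways, at ~$0.19 per node-hour:
wide = estimate_compute_cost(n_nodes=100, cost_per_node_hour=0.19, runtime_hours=1)
narrow = estimate_compute_cost(n_nodes=1, cost_per_node_hour=0.19, runtime_hours=100)
print(f"100 nodes x 1 h: ${wide:.2f}")
print(f"1 node x 100 h:  ${narrow:.2f}")
```

Under these simplified assumptions, the work itself costs the same either way ($19.00 for 100 node-hours). The estimates differ ($25.33 versus $19.06) only because the 20-minute idle window is counted once per node.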

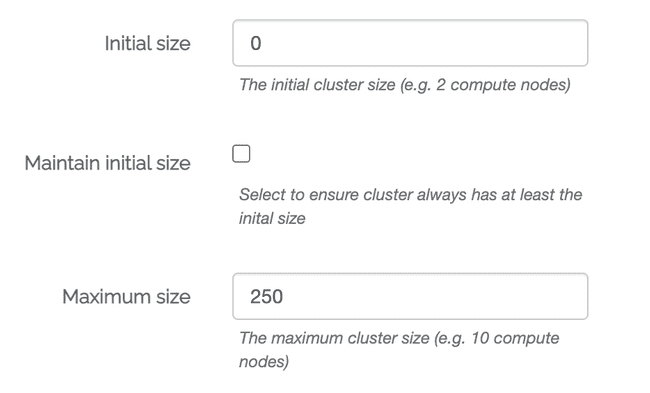

HPC Cluster Size Configurations

There are 3 size configurations when creating a new workflow:

Initial Size

Maintain Initial Size

Maximum Size

Initial Size is the number of compute nodes started when the HPC cluster is first created.

Maintain Initial Size keeps the HPC cluster at or above the defined Initial Size. In other words, it prevents your workflow from autoscaling below the Initial Size you selected. Use this option with extreme caution: those nodes stay up, and keep accruing cost, even when they are idle.

Maximum Size is the maximum number of compute nodes the HPC cluster can scale up to.
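One way to picture how the three settings interact is as bounds on the compute node count. The Python sketch below is a simplified, hypothetical model, not Metworx's actual autoscaler. It assumes the autoscaler wants some number of nodes based on queued work (`demand`), then clamps that number between a floor and a ceiling. The floor is Initial Size when Maintain Initial Size is enabled and zero otherwise. The ceiling is Maximum Size.

```python
def target_node_count(demand: int, initial_size: int, maximum_size: int,
                      maintain_initial_size: bool) -> int:
    """Clamp a desired compute node count to the size settings (hypothetical model).

    demand: number of compute nodes the queued work would like to use.
    """
    if not 0 <= initial_size <= maximum_size:
        raise ValueError("expected 0 <= initial_size <= maximum_size")
    floor = initial_size if maintain_initial_size else 0
    return max(floor, min(demand, maximum_size))


# No queued work: in this model the cluster scales to zero compute nodes,
# unless Maintain Initial Size holds the initial nodes up while idle.
print(target_node_count(demand=0, initial_size=5, maximum_size=20,
                        maintain_initial_size=False))  # 0
print(target_node_count(demand=0, initial_size=5, maximum_size=20,
                        maintain_initial_size=True))   # 5
# Heavy load is capped at Maximum Size.
print(target_node_count(demand=50, initial_size=5, maximum_size=20,
                        maintain_initial_size=False))  # 20
```

In this model, the Maintain Initial Size floor carries the cost risk. For example, five idle nodes held up at the ~$0.19/hr per-node price quoted above would cost roughly $22.80 per day.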

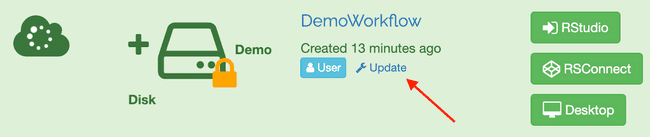

Updating HPC Size Configurations

You can update your HPC configuration settings after an HPC cluster has been spun up within a workflow.

To update an Active workflow, click the blue 'Update' link with the wrench icon to its left, as shown in the screenshot below.

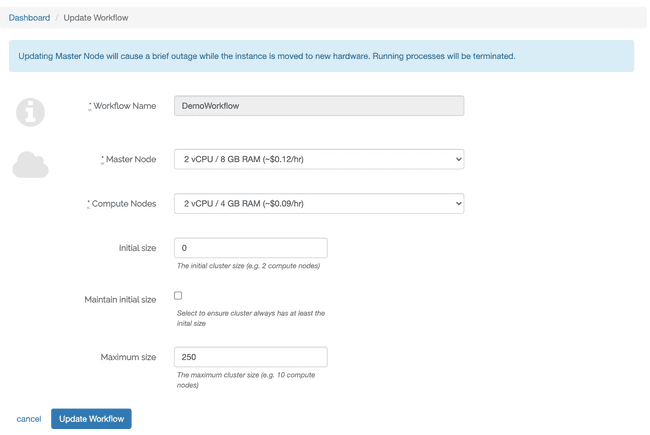

Clicking the Update link opens the Update Workflow screen.

You can update the Compute Nodes and Maximum Size configurations without any impact on the running machine or your existing jobs. However, updating the Head Node configuration requires the workflow to reboot, which terminates any running processes and causes a brief outage.
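Before submitting an update, it can help to check whether the update touches the head node. The sketch below encodes the rule from the paragraph above. The field names are hypothetical labels for settings on the Update Workflow screen, not identifiers from the Metworx interface or any API.

```python
# Settings that, per the paragraph above, can change without affecting running jobs.
NON_DISRUPTIVE = {"compute_nodes", "maximum_size"}
# Settings whose change forces a workflow reboot, terminating running processes.
REQUIRES_REBOOT = {"head_node"}


def update_requires_reboot(changed_fields: set[str]) -> bool:
    """Return True if any changed field forces a workflow reboot.

    Raises ValueError for fields this sketch does not classify.
    """
    unknown = changed_fields - NON_DISRUPTIVE - REQUIRES_REBOOT
    if unknown:
        raise ValueError(f"unclassified fields: {sorted(unknown)}")
    return bool(changed_fields & REQUIRES_REBOOT)


print(update_requires_reboot({"maximum_size"}))               # False
print(update_requires_reboot({"compute_nodes", "head_node"}))  # True
```

Any other field raises an error rather than being guessed as safe, because the paragraph above describes only these three settings.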