Running Monolix in Parallel

Introduction

Monolix allows the user to utilize parallel processing to improve the speed of modeling runs. This is enabled by default on Metworx. This article describes several ways to take advantage of this parallel processing on Metworx.

Using the GUI on the master node

If you are using the Monolix GUI in the Metworx virtual desktop, your models run on your master node. By default, Monolix uses all available cores on that node.

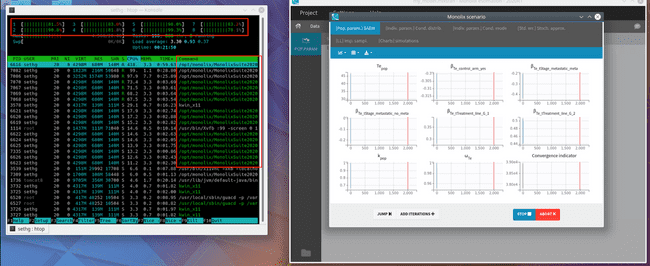

You can verify this, as well as monitor your modeling process, by opening a terminal and using the htop Linux utility. Simply click the "Konsole" icon on the bottom left to open a new terminal window. Then type htop and press enter.

Notice that, when your model is running in Monolix, you will see all of the cores lit up green to indicate that they are doing work, as well as a number of Monolix subprocesses in the process list.
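If you prefer a non-interactive check to htop, roughly the same information is available from the command line. The following is a minimal sketch. It assumes the Monolix worker processes have "monolix" somewhere in their command line, which may not match your installation; the function names are hypothetical.

```shell
#!/bin/bash
# Number of cores available on this node.
count_cores() {
    nproc
}

# Count running processes whose command line matches a pattern.
# The default pattern "monolix" is an assumption about how the
# Monolix processes are named; adjust it if yours differ.
count_procs() {
    local pattern="${1:-monolix}"
    # pgrep -f matches against the full command line; -c prints the count.
    # pgrep exits non-zero when nothing matches, so that status is ignored.
    pgrep -fc -- "$pattern" || true
}

echo "Cores on this node: $(count_cores)"
echo "Matching processes: $(count_procs monolix)"
```

While a model is running, the second number should be greater than zero. It reads zero when no process matches the pattern.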

Running models on the SGE grid

You can also use the terminal (either in the virtual desktop, in RStudio, or via SSH) to submit Monolix models to run on the compute nodes of the SGE grid. This is primarily beneficial because it allows you to use Metworx's auto-scaling capabilities. That is, you can submit some number of models and Metworx will automatically scale up enough compute nodes to run those models, and then turn them off when the models are finished.

Create submission script

First, you will create a shell script for submitting to run on the SGE grid. Open a blank text file, copy the following into it, and save it as monolix_sge.sh. Likely, you will want to save this to your Metworx disk, in the same directory as your Monolix project file.

#!/bin/bash

#$ -cwd

#$ -V

#$ -o submit-monolix-$JOB_ID.out

#$ -e submit-monolix-$JOB_ID.err

#$ -pe orte 4

/opt/monolix/MonolixSuite2020R1/lib/monolix --no-gui -p "$1"

There is more detail on what this script is doing in the Appendix at the bottom of this document.

Setting the number of cores

The -pe orte 4 line in the above script specifies that this model should be parallelized across 4 vCPUs. There are two important points here:

- You must change this number to use more or fewer cores for this model.

- Do not set this number to anything larger than the size of your individual compute nodes.
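One way to change the core count without editing the script each time is to pass -pe on the qsub command line, since SGE gives command-line options precedence over the #$ lines embedded in a script. The helper below is a hypothetical sketch: the function name build_qsub_cmd and the MAX_SLOTS variable are illustrative, not part of Metworx or Monolix. MAX_SLOTS must be set by hand to the vCPU count of one of your compute nodes, because the sketch does not discover it. The function only prints the command so that you can inspect it before running it.

```shell
#!/bin/bash
# Hypothetical helper: print a qsub command that requests SLOTS cores.
# MAX_SLOTS is an assumption: set it to the vCPU count of one compute node.
MAX_SLOTS="${MAX_SLOTS:-8}"

build_qsub_cmd() {
    local slots="$1" project="$2"
    # Reject anything that is not a positive integer (no leading zeros).
    case "$slots" in
        ''|*[!0-9]*|0*)
            echo "slots must be a positive integer, got: $slots" >&2
            return 1
            ;;
    esac
    # The article advises against requesting more slots than one node has.
    if [ "$slots" -gt "$MAX_SLOTS" ]; then
        echo "slots ($slots) exceeds compute node size ($MAX_SLOTS)" >&2
        return 1
    fi
    # -pe on the command line overrides the "#$ -pe orte 4" line in the script.
    printf 'qsub -pe orte %s monolix_sge.sh %s\n' "$slots" "$project"
}

build_qsub_cmd 2 /path/to/my_model.mlxtran
```

The printed line can be pasted into the terminal as is. Quote the project path yourself if it contains spaces.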

Call the submission script

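The shell script above takes one argument: the path to the Monolix project file to run. This should either be an absolute path (safest) or a path relative to the directory from which you call qsub, because the -cwd option runs the job from that directory. If you submit from the directory containing monolix_sge.sh, the two locations are the same.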

To run this on the SGE grid, simply pass it to qsub. An example call to run my_model.mlxtran:

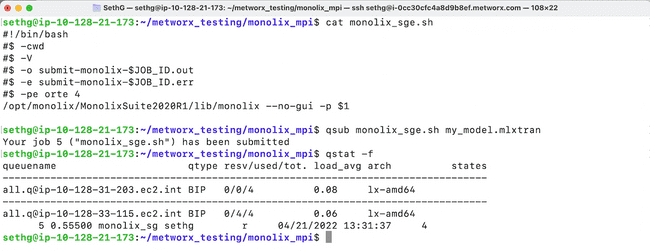

$ qsub monolix_sge.sh /path/to/my_model.mlxtranMonitor the model submission

The simplest way to monitor jobs on the SGE grid is with the qstat -f command. The screenshot below shows submitting the model and then verifying that it is running on the grid with qstat -f.
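Because each qsub call queues one model, submitting a batch of models comes down to looping over the project files. The sketch below assumes the project files sit in a single directory and that monolix_sge.sh is in the directory you submit from. The function name submit_all and the DRY_RUN variable are hypothetical. With DRY_RUN=1 (the default here) it only prints the commands it would run.

```shell
#!/bin/bash
# Hypothetical helper: submit every .mlxtran file in a directory.
# DRY_RUN=1 prints the qsub commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

submit_all() {
    local dir="$1" project
    for project in "$dir"/*.mlxtran; do
        # Skip the unexpanded glob when the directory has no project files.
        [ -e "$project" ] || continue
        # Use an absolute path, which the article notes is the safest choice.
        project="$(cd "$(dirname "$project")" && pwd)/$(basename "$project")"
        if [ "$DRY_RUN" = "1" ]; then
            echo "qsub monolix_sge.sh $project"
        else
            qsub monolix_sge.sh "$project"
        fi
    done
}

# Example: create two empty placeholder files and show what would be submitted.
demo_dir="$(mktemp -d)"
touch "$demo_dir/model_a.mlxtran" "$demo_dir/model_b.mlxtran"
submit_all "$demo_dir"
rm -r "$demo_dir"
```

After submitting for real (DRY_RUN=0), qstat -f lists the queued and running jobs as described above.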

Appendix: More detail on the submission script

This section provides extra detail on the SGE submission shell script, and most users can skip it. The above script does the following:

- Calls the full path to the Monolix command line executable.

  - In this example we use /opt/monolix/MonolixSuite2020R1/lib/monolix, though this can be changed to a different installation if desired.

  - Monolix needs to be installed at this path on all the worker nodes as well as the master node. (On Metworx it is, so this works.)

  - Also sets the --no-gui flag, because we are calling from the command line and do not wish to launch the GUI.

  - Passes the path to the Monolix project file through to the -p argument.

- Sets some SGE options (everything preceded by #$).

  - The -pe orte 4 option reserves the right number of slots on the grid (in this case 4). Change this value to use more or fewer threads for your Monolix job.

  - The -cwd option runs the job from the directory where qsub was invoked, rather than from your home directory.

  - The -V option passes through environment variables to the worker nodes.

  - The -o and -e options specify log files for stdout and stderr respectively.