Parallel Computing Intro

Scope

Many compute tasks benefit from being run in parallel (i.e., executing multiple parts of the task simultaneously). You can do this on a single machine, such as your laptop or the Metworx workflow head node, or on a cluster or grid of machines.

This article covers the basic principles of how computers perform work and then builds on those to explain some fundamentals of parallel computing.

If you're comfortable with these topics and would like to read about grid computing (i.e., running jobs on your compute nodes), see Grid Computing Intro.

Computing Basics

It is helpful to have a high-level understanding of how computers work before considering how a computer performs work in parallel.

Computing Terminology

CPU - The central processing unit (CPU) is the principal part of any digital computer system, generally composed of the main memory, control, and arithmetic-logic units.

Core - The core, or CPU core, is the "brain" of the CPU. It receives instructions and performs calculations or operations to satisfy those instructions. A CPU can have multiple cores.

Process - A process is a collection of code, memory, data, and other resources. In the simplest terms, it is an executing program.

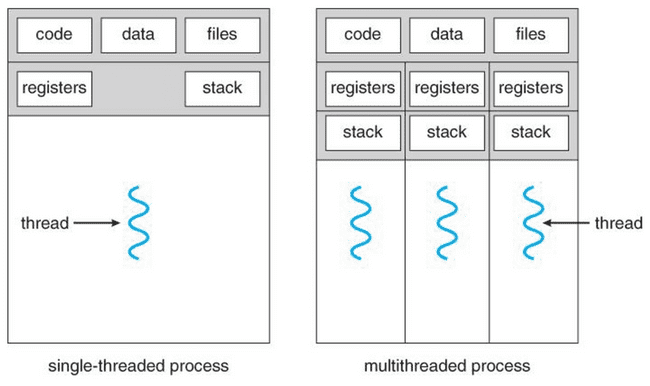

Thread - A thread is the basic unit to which an operating system allocates processing time. One or more threads run in the context of any process.

CPU Core Execution

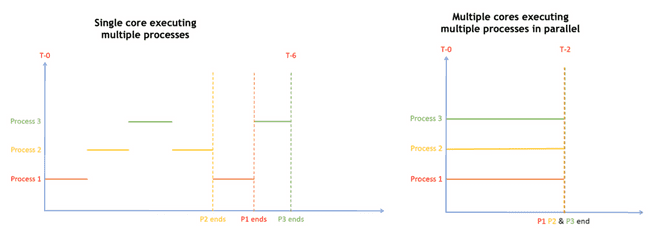

A single core executes a single process (actually a single thread) at any given time. However, the operating system (OS) scheduler constantly swaps which process is executing, for example when the current process is waiting on an activity such as user input or a read from disk or network.

Parallel Processing

Some compute tasks benefit from running multiple parts of the task in parallel. This requires multiple cores because a single CPU core only executes one process at a time (see the caveat on hyperthreading, below).

Here we review the basic concepts of parallel processing and then demonstrate how different compute tasks may or may not be suited for parallelization.

Processes and Threads

Every process consists of one or more threads. Threads are the smallest unit of execution within a compute process. They're considered lightweight in terms of compute load because they share the memory space of the process that spawned them.
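As a minimal sketch of threads sharing their parent process's memory, the Python snippet below starts four threads that all write into one list. Python is used purely for illustration, and the names `results` and `record_square` are hypothetical.

```python
import threading

# A list owned by the process; every thread it spawns sees the same object.
results = []
lock = threading.Lock()

def record_square(n):
    """Append n squared to the shared list."""
    with lock:  # guard the shared list against concurrent writes
        results.append(n * n)

threads = [threading.Thread(target=record_square, args=(n,)) for n in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# All four threads wrote into the one list, with no copying between them.
print(sorted(results))
```

No data had to be copied to the threads; each one simply used the memory that already belonged to the process.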

Single-Threaded Processes

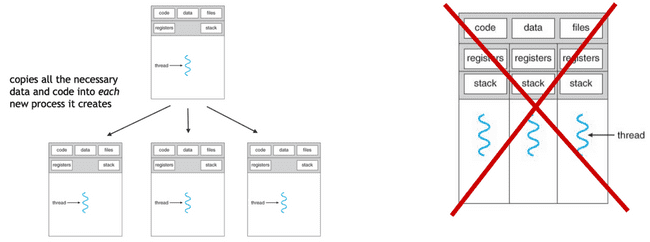

Single-threaded processes have their own memory space and do not share resources with other processes.

Launching single-threaded processes is resource-intensive because they don't share memory or file resources. All data files must be copied to each process, and accessing, copying, and moving data is extremely slow compared to processing data. This overhead sometimes overwhelms the gains of processing in parallel.
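To illustrate why separate processes need their data copied, here is a rough Python sketch that hands a small data set to a child process. The data, the `child_code` string, and the choice of JSON over stdin are all assumptions made for the example; this is not how R or NONMEM pass data.

```python
import json
import subprocess
import sys

data = {"values": [1, 2, 3, 4]}

# Code the child process runs: read the copied data from stdin, sum it, print the result.
child_code = "import json, sys; print(sum(json.load(sys.stdin)['values']))"

# The child has its own memory space, so the data must be serialized
# and sent across the process boundary (here via stdin).
completed = subprocess.run(
    [sys.executable, "-c", child_code],
    input=json.dumps(data),
    capture_output=True,
    text=True,
    check=True,
)
child_total = int(completed.stdout)
print(child_total)
```

Even in this tiny case the parent pays for starting an interpreter, serializing the data, and reading the result back. With large data files that cost grows accordingly.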

Both R and NONMEM use single-threaded processes. Every time you parallelize in R or NONMEM, single-threaded processes are launched using this computationally intensive startup process. The only exception to this is using "forking", which has its own drawbacks, discussed below.

Hyperthreaded Processes

Hyperthreading allows a single CPU core to run two or more threads at once. In AWS, which uses hyperthreading by default, eight vCPUs (virtual CPUs) correspond to four physical CPU cores that can each run two threads. There are some nuances to hyperthreading that are beyond the scope of this article; for now, know that conceptually it behaves like having twice as many cores, while in practice it is typically about 1.5x as fast.
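The vCPU arithmetic can be sketched in a few lines of Python. The helper `estimate_physical_cores` is hypothetical, and the default of two threads per core is an assumption taken from the example above rather than something detected from the hardware.

```python
import os

def estimate_physical_cores(logical_cpus, threads_per_core=2):
    """Estimate physical cores from a logical CPU (vCPU) count.

    threads_per_core=2 is an assumption matching the hyperthreading
    example in the text; it is not detected from the hardware.
    """
    return max(1, logical_cpus // threads_per_core)

# os.cpu_count() reports logical CPUs and may return None.
logical = os.cpu_count() or 1
print(f"logical CPUs: {logical}, estimated physical cores: {estimate_physical_cores(logical)}")
```

When choosing a worker count, it may be worth checking whether the number you are looking at counts logical or physical cores.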

When to Parallelize?

When deciding whether to parallelize your code, you'll need to consider the number of compute nodes to parallelize across, the time-cost associated with moving the data to each of those nodes, and the time it takes to actually do the computational work. You can then compare that to the time required to do that process on a single thread.

Example 1: What happens during parallel processing?

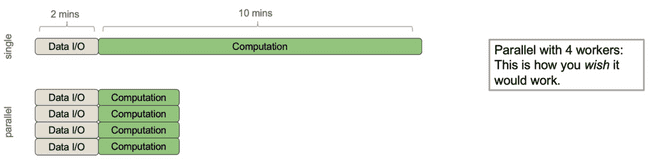

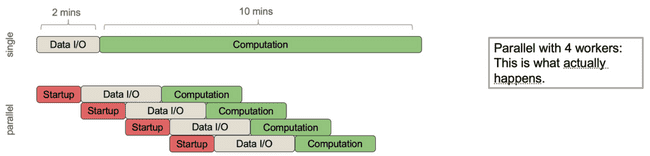

Consider a process that includes roughly two minutes for data input/output (I/O, i.e., time to move data from disks or between processes) and ten minutes of actual computation (doing math, etc.).

The above figure shows the process running on a single thread and what would ideally occur if the process were run on four worker nodes. This ideal, yet unrealistic, scenario shows that, even with the repeated time-cost of data I/O, the process would run almost four times faster.

In reality, when we parallelize this relatively short process across four worker nodes, here's what happens:

The parent process spends time starting up each of the child processes that do the work, then it passes the necessary data to each of them (data I/O period) and finally carries out the computational work. Importantly, the parent process can only perform one task at a time, which means any communication with child processes is done sequentially. So, although you are distributing the computational work among four worker nodes, there is an associated cost for starting up each node and passing data to it.

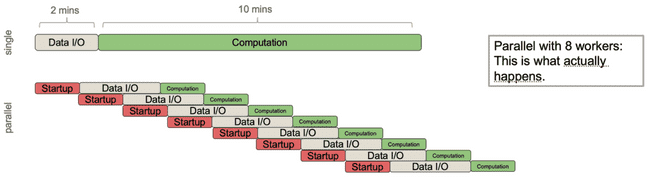

The additional time cost associated with starting up a child process and data I/O must be considered when deciding whether to parallelize your code and, if so, how many nodes to parallelize across. For example, imagine that you instead chose to distribute this same job over eight workers in an attempt to speed the process up even further:

The parallelized job now takes even longer than the single-threaded job. This is because the parent process must spend much more time starting up each child process and sending them data than it gains from the comparatively short computation time on each child process. This is a big pitfall of parallel processing. In these situations, distributing to more cores can sometimes slow down your total run time.
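The trade-off in this example can be approximated with a deliberately simple cost model, sketched below in Python. The function `estimated_run_time` and its parameters are hypothetical. It assumes the parent pays the data I/O (and any startup) cost once per worker, one worker after another, and that the computation splits evenly. It is not derived from the figures.

```python
def estimated_run_time(compute_min, io_min, workers, startup_min=0.0):
    """Estimate wall-clock minutes for a job split evenly across `workers`.

    Simplifying assumptions (not taken from the article's figures):
    - the parent starts and feeds each worker one after another, so the
      startup and data I/O cost is paid once per worker, sequentially;
    - the computation divides evenly, so each worker does compute_min / workers.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    if workers == 1:
        return io_min + compute_min  # single process: no child startup
    overhead = workers * (startup_min + io_min)
    return overhead + compute_min / workers

# Two minutes of data I/O and ten minutes of computation, as in Example 1.
for n in (1, 4, 8):
    print(n, estimated_run_time(compute_min=10, io_min=2, workers=n))
```

Under these assumptions the job takes 12 minutes on one process, 10.5 minutes on four workers, and 17.25 minutes on eight, so the eight-worker run is slower than not parallelizing at all. Real overheads will differ, but the overall pattern is what matters.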

Example 2: A good candidate for parallel processing

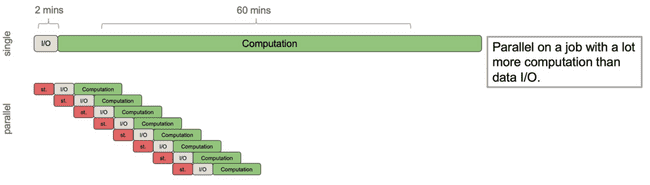

Consider a process that still has roughly two minutes of data I/O time but now includes roughly an hour of computation time.

Parallelizing this process across eight nodes results in significant time savings. In this example, the startup and data I/O times are relatively small compared to the compute time. Thus the gains of spreading the computational work across multiple nodes far outweigh the work done by the parent process to start up those workers and send them data.
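To see why the longer computation changes the picture, the Python sketch below scans worker counts under a simple assumed model: a fixed per-worker overhead paid sequentially by the parent, plus an evenly split computation. The functions `run_time` and `best_worker_count` are hypothetical, and the model is an illustration rather than a measurement.

```python
def run_time(compute_min, overhead_min, workers):
    """Wall-clock minutes if per-worker overhead is paid sequentially
    and the computation splits evenly (a simplifying assumption)."""
    if workers == 1:
        return overhead_min + compute_min
    return workers * overhead_min + compute_min / workers

def best_worker_count(compute_min, overhead_min, max_workers):
    """Return the worker count in 1..max_workers with the lowest estimated run time."""
    return min(
        range(1, max_workers + 1),
        key=lambda n: run_time(compute_min, overhead_min, n),
    )

# Two minutes of data I/O per worker and an hour of computation, as in Example 2.
single = run_time(60, 2, 1)
eight = run_time(60, 2, 8)
print(single, eight, best_worker_count(60, 2, 16))
```

In this model the eight-worker run is well under half the single-process time. The estimated optimum still sits below eight workers, though, because each extra worker adds overhead while removing less and less computation.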

Start Small and Avoid Over-Parallelization

Over-parallelizing (i.e., using more worker nodes than your computation merits) slows down your code, sometimes significantly. It's a good idea to run some testing iterations on jobs that need to be parallelized. Start small (e.g., four workers) and work your way up, determining the optimal number of workers before kicking off a potentially long-running job.

See the "Testing different numbers of threads" section in this article: Running NONMEM in Parallel: bbr Tips and Tricks.
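A small timing harness is one way to run this kind of test. In the Python sketch below, `time_worker_counts` is a hypothetical helper and `fake_job` is only a stand-in that sleeps. In practice you would pass a function that launches your real job with the given number of workers.

```python
import time

def time_worker_counts(run_job, worker_counts):
    """Time run_job(n_workers) for each count and return {n_workers: seconds}."""
    timings = {}
    for n in worker_counts:
        start = time.perf_counter()
        run_job(n)
        timings[n] = time.perf_counter() - start
    return timings

def fake_job(n_workers):
    """Hypothetical stand-in for a real parallel job: sleeps for a duration that
    grows with per-worker overhead and shrinks as the work is split further."""
    time.sleep(0.01 * n_workers + 0.2 / n_workers)

timings = time_worker_counts(fake_job, [1, 2, 4, 8])
best = min(timings, key=timings.get)
for n, seconds in timings.items():
    print(f"{n} workers: {seconds:.3f} s")
print("fastest:", best)
```

Running the test on a short, representative version of the job keeps it cheap while still showing where adding workers stops helping.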

Nested Parallelism

Nested parallelism is when you have jobs executing in parallel and those jobs attempt to launch more jobs to execute in parallel. This should be handled with care.

Unintended Nested Parallelism

Unintentional parallelization can occur when something you’re running is parallelizing under the hood. Two common examples include:

- Processes doing heavy linear algebra (BLAS and LAPACK)

- Using data.table::fread()

There's a good summary of this issue in the “Hidden Parallelism” section of Roger Peng's R Programming for Data Science.
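One common way to rein in hidden parallelism is to cap library thread counts through environment variables before launching each worker. The Python sketch below sets `OMP_NUM_THREADS`, `OPENBLAS_NUM_THREADS`, and `MKL_NUM_THREADS` for a child process. Whether a given library honors them depends on how it was built, so treat this as an assumption to verify. The helper name `single_threaded_env` is hypothetical.

```python
import os
import subprocess
import sys

def single_threaded_env(base_env=None):
    """Return a copy of the environment with common thread-count variables set to 1."""
    env = dict(os.environ if base_env is None else base_env)
    for name in ("OMP_NUM_THREADS", "OPENBLAS_NUM_THREADS", "MKL_NUM_THREADS"):
        env[name] = "1"
    return env

# Launch a worker with the capped environment; this child just echoes one setting.
child_code = "import os; print(os.environ['OMP_NUM_THREADS'])"
completed = subprocess.run(
    [sys.executable, "-c", child_code],
    env=single_threaded_env(),
    capture_output=True,
    text=True,
    check=True,
)
print(completed.stdout.strip())
```

Setting the limit per worker keeps each one to a single thread, so the number of workers you chose is the number of cores actually in use.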

Intentional Nested Parallelism

Sometimes you have two (or more) levels of computation that could be parallelized. For example:

- Running multiple NONMEM models at once and parallelizing each model to run on multiple cores.

- Running a Bayesian model with four chains, where each chain is run in parallel and computation within a chain is also parallelized.

- Splitting a data set into subgroups and running multiple simulations on each subgroup.

These tasks can certainly be accomplished by running them on a single very large machine that has enough cores for all the workers that are spawned. However, in many cases, it is cheaper, both in run time and AWS costs, to do this work on the grid.
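When both levels are parallelized on one machine, the total worker count is the product of the two levels. The Python sketch below shows one way to budget cores. The function names and the 16-core machine are hypothetical.

```python
def inner_threads_per_job(total_cores, outer_jobs):
    """Threads each outer job can use without oversubscribing total_cores."""
    if outer_jobs < 1 or total_cores < 1:
        raise ValueError("total_cores and outer_jobs must be at least 1")
    return max(1, total_cores // outer_jobs)

def is_oversubscribed(total_cores, outer_jobs, threads_per_job):
    """True if the combined workers exceed the available cores."""
    return outer_jobs * threads_per_job > total_cores

# Four chains on a hypothetical 16-core machine.
print(inner_threads_per_job(16, 4))   # 4
print(is_oversubscribed(16, 4, 8))    # True
```

Checking the product up front helps avoid accidentally asking for far more workers than the machine has cores.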

Sidebar: Bootstraps

If you are running many models at once (e.g., a bootstrap), you can easily run into the same pitfalls as with BLAS or data.table. In this case, it is usually best to run each model on a single core and parallelize across models instead, for example, with bbr::submit_models().

Additional Learning

To learn more about applying these concepts on Metworx, please see the following: