What is a Workflow?

Scope

This page outlines the core components of a Metworx workflow.

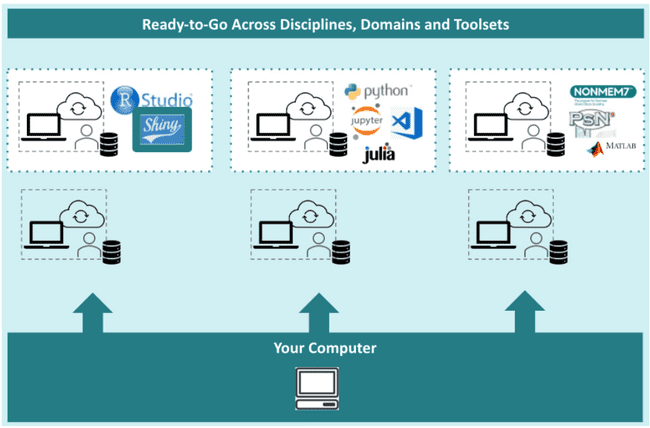

A Metworx workflow is a cloud-based, personal analytical workstation that combines cloud resources, a disk, and a selection of software (the blueprint). Workflows give each user access to powerful high-performance computing (HPC) resources for scientific and statistical analysis without the need for a shared grid. Each workflow contains all of its tools and software within a controlled computing environment.

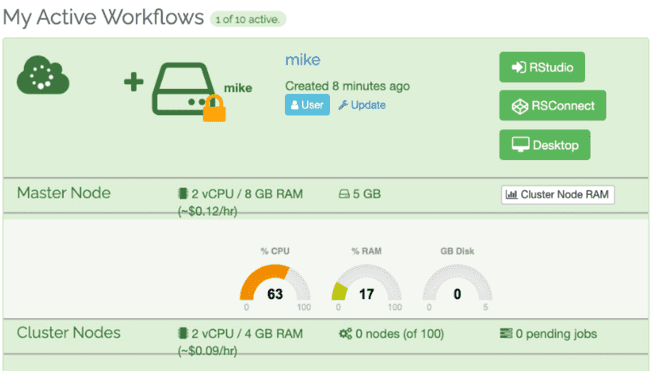

Once launched, a Metworx workflow appears on an interactive dashboard. Details such as workflow performance, disk name, and workflow name are displayed according to the user's selected preferences. The green and white buttons are launch buttons that open the software used for analysis.

Metworx Workflow Components

Each workflow can be broken down into three key elements:

- The blueprint: a curated selection of software plus some underlying technical components

- A disk: where data is stored, can be shared, and persists after a workflow is shut down

- HPC resources: a combination of head and compute cloud resources

Blueprint

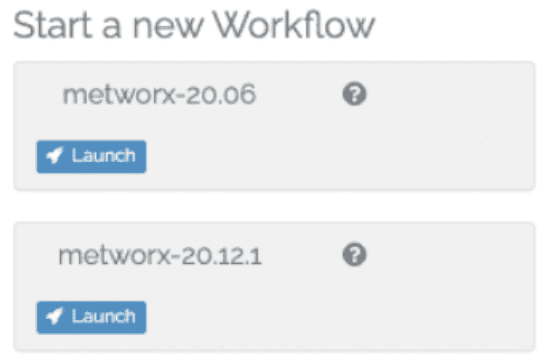

A blueprint includes a selection of cutting-edge, version-controlled software for scientific and statistical analyses, along with some underlying technical components. The most recent Metworx blueprint generally has the latest version(s) of all software, while legacy blueprints retain the software versions that were available when the blueprint was released, to ensure backwards compatibility. A full list of software provided in each blueprint can be found here.

Metworx blueprints are labeled with a calendar-versioned naming convention based on release date: <two-digit year>.<month>. For example, a blueprint labeled 20.12 was originally released in December 2020, and a blueprint labeled 20.06 was released in June 2020.
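Because the label encodes the release date, a label can be turned back into a date, and labels can be ordered by release. The sketch below assumes the month is always written with two digits (as in 20.06) and that the two-digit year means 2000 to 2099; both are assumptions, and the function names `parse_blueprint_label` and `newest` are hypothetical helpers, not part of Metworx.

```python
from datetime import date


def parse_blueprint_label(label: str) -> date:
    """Convert a blueprint label such as "20.12" to its release month.

    Assumes a <two-digit year>.<two-digit month> label and a 2000-2099 year
    (both assumptions based on the examples in this article).
    """
    year_part, month_part = label.split(".")
    if len(year_part) != 2 or len(month_part) != 2:
        raise ValueError(f"expected YY.MM, got {label!r}")
    month = int(month_part)
    if not 1 <= month <= 12:
        raise ValueError(f"month out of range in {label!r}")
    # The label records only year and month, so the day is fixed to 1.
    return date(2000 + int(year_part), month, 1)


def newest(labels: list[str]) -> str:
    """Return the label with the most recent release date."""
    return max(labels, key=parse_blueprint_label)


print(parse_blueprint_label("20.06"))  # → 2020-06-01
print(newest(["20.06", "20.12"]))      # → 20.12
```

Comparing parsed dates rather than raw strings keeps the ordering correct even if a label were ever written without zero-padding.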

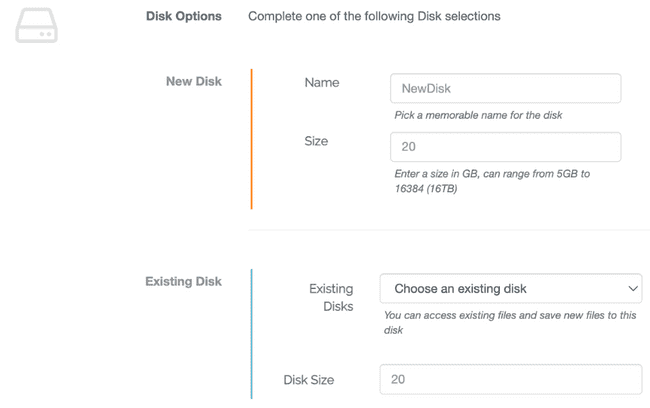

Disk

Each Metworx workflow requires a disk to work with data. To launch a workflow, you must either create a new disk or select an existing one. Each disk requires a name and a disk size.

For more detailed instructions, please refer to the Working with Disks article.

HPC Resources

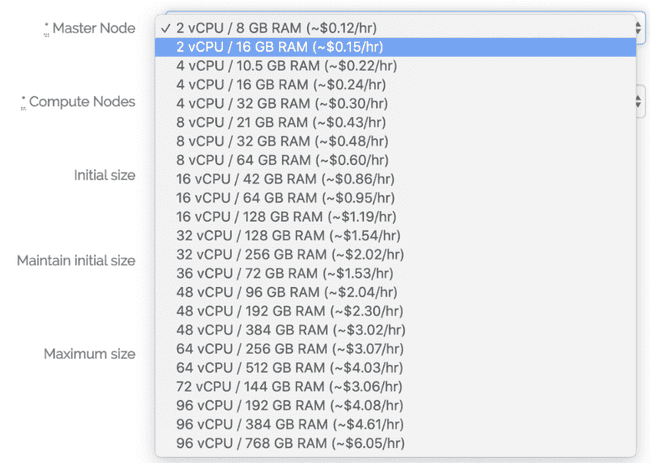

When you launch a Metworx workflow, you select a combination of cloud resources consisting of a head node and compute nodes. Together, these resources are referred to as an HPC cluster, which remains running until you delete the workflow. Base your selection on the needs of your scientific or statistical analysis; the right combination varies from analysis to analysis. Several head node sizes and compute resource options are available. See the HPC options article for more details.

Metworx uses an autoscaling approach to cloud resources: compute resources are added ("scaled up") while computing jobs are running and removed ("scaled down") when they are not in use, such as after a compute job completes. You get the compute resources you need only when you need them, which can yield significant cost savings.
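To make the scale-up/scale-down idea concrete, here is a toy rule that sizes the compute pool to the queued work and drops to zero nodes when the queue is empty. This is an illustrative sketch only, not Metworx's actual autoscaling logic; the functions and the parameters `jobs_per_node` and `max_nodes` are hypothetical.

```python
def desired_compute_nodes(queued_jobs: int, jobs_per_node: int, max_nodes: int) -> int:
    """Toy autoscaling rule: enough nodes for the queued jobs, none when idle."""
    if jobs_per_node <= 0:
        raise ValueError("jobs_per_node must be positive")
    if queued_jobs <= 0:
        return 0  # scale down: no queued work, so no compute nodes
    needed = -(-queued_jobs // jobs_per_node)  # ceiling division
    return min(needed, max_nodes)  # scale up, but never past the cap


def simulate(queue_lengths: list[int], jobs_per_node: int, max_nodes: int) -> list[int]:
    """Apply the rule to a series of queue snapshots over time."""
    return [desired_compute_nodes(q, jobs_per_node, max_nodes) for q in queue_lengths]


# Queue is empty, fills up, peaks, drains, and empties again.
print(simulate([0, 5, 40, 12, 0], jobs_per_node=4, max_nodes=8))  # → [0, 2, 8, 3, 0]
```

The cost saving comes from the first and last snapshots: while nothing is queued, the rule keeps zero compute nodes running.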

Head Node

The scientific and statistical software available on Metworx runs on the head node. This includes RStudio products and the remote desktop interface, where MATLAB, Monolix, and other statistical and graphical software can be accessed. When choosing a head node, consider the computational power and memory needed to run your analyses with this software.

Compute Nodes

Compute nodes run command-line jobs submitted to the grid, such as NONMEM jobs or batches of R scripts. Offloading this work to compute nodes keeps the head node from being overwhelmed when large volumes of work are running. Recommendations for choosing an HPC configuration are available in the HPC options article.