Grid Computing Intro

Scope

Many compute tasks benefit from running in parallel (i.e., executing multiple parts of a task simultaneously). This can be done on a single machine, such as your laptop or Metworx workflow head node, or on a cluster or grid of machines.

This page covers grid computing, that is, utilizing compute nodes in a high-performance compute cluster, on your Metworx workflow.

This content assumes a basic understanding of parallel computing. If you are not sure whether you have this understanding, please see Parallel Computing Intro for foundational principles of how computers work and basic concepts of parallel computing.

SGE and Slurm job schedulers

This page primarily discusses using SGE as the job scheduler for submitting jobs to the grid. Blueprints 24-04 and later have the option to use Slurm (instead of SGE) as the job scheduler. See "SGE-to-Slurm Quick Start Guide" for information on using Slurm.

The Grid

Parallel computing within a single machine works well for many tasks. For large tasks, however, and tasks involving nested parallelism, using a grid or cluster of multiple machines has many advantages. For example:

- Auto-scaling: the grid launches new compute nodes (or workers) when there are tasks to run and then tears them down when the tasks are finished. This saves on compute costs since you only pay for the resources that you actually need.

- Job queueing: the grid keeps your jobs in a queue until it has the resources to run them.

- Spot instances: You can configure Metworx to use AWS spot instances for your compute nodes. Spot instances tap into AWS's excess capacity, which can reduce your cloud computing costs.
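
The auto-scaling and job-queueing ideas above can be sketched with a toy Python model. This is not Metworx's actual scaling logic. It assumes that each job requests a fixed number of cores, that a job never spans two nodes, and that the grid adds nodes until every job has a slot or a hypothetical `max_nodes` cap is reached. The function name and all parameters are illustrative.

```python
def nodes_needed(job_cores, cores_per_node, max_nodes):
    """Toy estimate of how many compute nodes a list of jobs would occupy.

    job_cores: cores requested by each submitted job (hypothetical input).
    Returns (nodes_launched, jobs_left_waiting_in_queue).
    """
    free = []    # free cores remaining on each node launched so far
    queued = 0   # jobs that must wait because the node cap was reached
    for cores in job_cores:
        if cores > cores_per_node:
            raise ValueError("job requests more cores than one node has")
        for i, avail in enumerate(free):
            if avail >= cores:
                free[i] -= cores   # place the job on the first node with room
                break
        else:
            # No existing node had room: launch a new one if allowed.
            if len(free) < max_nodes:
                free.append(cores_per_node - cores)
            else:
                queued += 1
    return len(free), queued


# Eight 4-core jobs on 8-core nodes: two jobs fit per node.
print(nodes_needed([4] * 8, cores_per_node=8, max_nodes=10))
# Same jobs with a cap of two nodes: the remaining jobs wait in the queue.
print(nodes_needed([4] * 8, cores_per_node=8, max_nodes=2))
```

With no jobs submitted, the model launches no nodes at all, which is the cost-saving behavior that auto-scaling is meant to provide.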

Each Metworx workflow serves as your own personal computing grid.

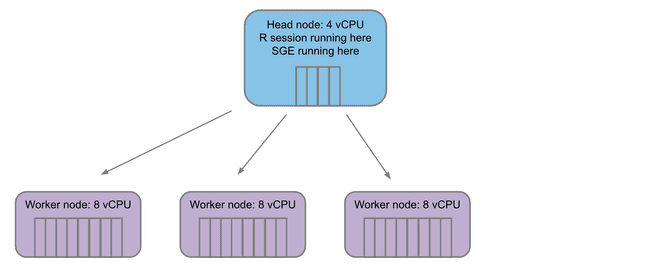

The Structure of the Grid

Each grid has a single head node. This is the machine that coordinates which jobs, if any, are sent off to compute nodes. The head node also runs your RStudio session and other programs.

When you submit jobs to the grid, the head node launches a number of compute nodes (based on the workload submitted) and fills a queue for the compute nodes to pull jobs from.
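
The pull-from-a-queue pattern described here can be illustrated with a small Python sketch. It is a conceptual stand-in only: threads play the role of compute nodes, a `queue.Queue` plays the role of the scheduler's queue, and names such as `run_grid` and `node-0` are made up for the example. A real scheduler also tracks cores, priorities, and node health.

```python
import queue
import threading


def run_grid(jobs, n_workers):
    """Toy model: worker threads pull job names from a shared queue."""
    pending = queue.Queue()
    for job in jobs:
        pending.put(job)           # the "head node" fills the queue

    done = {}                      # job name -> worker that ran it
    lock = threading.Lock()

    def worker(name):
        while True:
            try:
                job = pending.get_nowait()
            except queue.Empty:
                return             # queue drained, so this worker shuts down
            with lock:
                done[job] = name   # stand-in for actually running the job

    threads = [
        threading.Thread(target=worker, args=(f"node-{i}",))
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done


assignments = run_grid([f"model-{i}" for i in range(1, 9)], n_workers=4)
print(len(assignments), "jobs completed")
```

Which worker picks up which job is not deterministic. The point is only that every job in the queue is eventually taken by some worker.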

Configuring Your Grid on Metworx

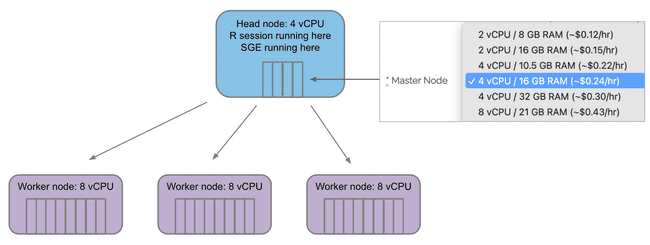

When launching your Metworx workflow, use the Master Node drop-down menu to select how many vCPU cores you would like on the head node. Remember, you will only ever have one head node.

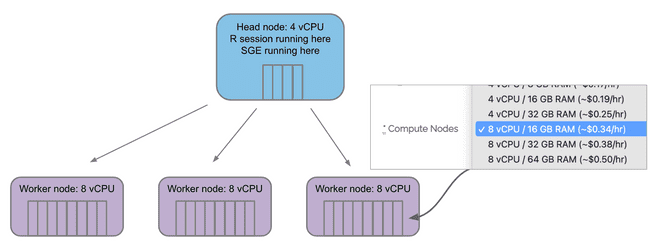

Use the Compute Nodes drop-down menu to select how many vCPU cores are on each compute (worker) node.

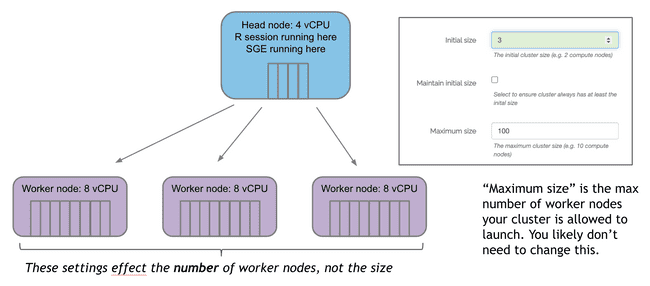

Initial size specifies how many compute nodes are created when you launch your workflow.

If you don't submit any jobs, these nodes will count down to termination unless you check the Maintain initial size box. Use this box with caution, since it overrides auto-scaling and can leave resources idle. Unless you have a good reason not to wait for compute nodes to scale up (typically 3-5 minutes from when jobs are submitted), it is much more cost-effective to leave this box unchecked and let auto-scaling manage the number of worker nodes.
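
As a rough illustration of the trade-off, the following Python sketch compares the cost of idle nodes with and without the Maintain initial size box checked. Every number is a placeholder: the hourly rate, the idle period, and the teardown delay are assumptions chosen for easy arithmetic, not Metworx or AWS figures.

```python
def idle_cost(n_nodes, idle_hours, hourly_rate,
              maintain_initial_size, teardown_after_hours):
    """Cost of compute nodes sitting idle (all inputs are hypothetical)."""
    if maintain_initial_size:
        billed_hours = idle_hours  # nodes stay up for the whole idle period
    else:
        # Nodes are torn down once the countdown expires.
        billed_hours = min(idle_hours, teardown_after_hours)
    return n_nodes * billed_hours * hourly_rate


# Four nodes idle for 8 hours at a made-up rate of 1.00 per node-hour,
# with an assumed 0.5-hour countdown before teardown.
maintained = idle_cost(4, 8, 1.0, True, 0.5)
autoscaled = idle_cost(4, 8, 1.0, False, 0.5)
print(maintained, autoscaled)
```

Under these assumptions, keeping the initial size costs 16 times more during the idle period. What auto-scaling costs in return is the scale-up wait when the next jobs are submitted.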

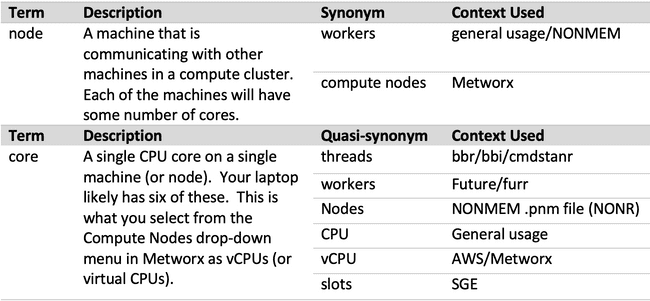

Grid Terminology

Grid computing uses a wide range of terminology to describe similar concepts. First, the terms "grid" and "cluster" are often used interchangeably, although there are slight technical differences. Additionally, the head node is often called the "master node", though that term is being phased out. Likewise, the compute nodes are sometimes called "workers" or "worker nodes".

Beyond that, the concepts of nodes (physical machines) and cores (CPU cores on those machines) have many quasi-synonyms of their own.

You may also notice that NONMEM, like most people, refers to nodes as workers, while the workers argument in functions from the future and furrr packages actually refers to the number of jobs to spawn (likely equal to your number of cores). Further, the NONMEM .pnm file refers to nodes as WORKERS and cores as NODES.

Feel free to use the above table as a resource to resolve the various terminology.

Submitting Jobs to the Grid

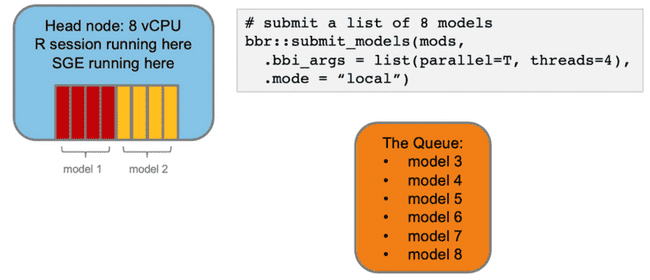

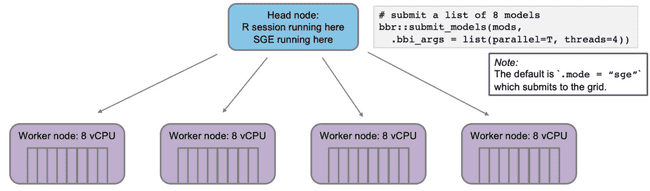

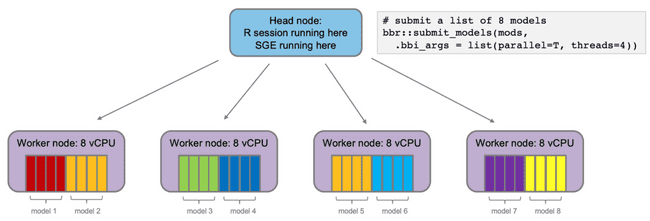

The diagrams in this section illustrate what happens when you submit jobs to run on the worker nodes of the grid. In this case, we're using bbr to submit NONMEM jobs from R. If you're using bbr to submit NONMEM jobs on the grid, consider reading Running NONMEM in Parallel: bbr Tips and Tricks for more details and best practices.

Imagine you want to submit some NONMEM models to run on your head node via bbr. You specify that they should run in parallel and each model should use 4 cores (threads).

Now, imagine you want to submit some NONMEM models to run on the grid via bbr. Again, you want them to run in parallel and each model should use 4 cores (threads).

The head node will submit the jobs to the SGE (Sun Grid Engine) queue, which will distribute them to the worker nodes. With 4 worker nodes, all models will run simultaneously--a much more efficient process.
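
The difference between the two scenarios comes down to simple arithmetic, sketched below in Python. The model count and core counts are assumptions made for illustration (four models, a 4-core head node, and 4-core worker nodes). The function `waves` is hypothetical and is not part of bbr, NONMEM, or SGE.

```python
import math


def waves(n_models, cores_per_model, cores_per_node, n_nodes):
    """Number of sequential rounds needed to run all models (toy model).

    Assumes a model never spans two nodes and all models take equally long.
    """
    models_per_node = cores_per_node // cores_per_model
    slots = models_per_node * n_nodes   # models that can run at the same time
    if slots == 0:
        raise ValueError("no node is large enough to run a single model")
    return math.ceil(n_models / slots)


# Head node only: one 4-core machine runs one 4-core model at a time.
head_node_waves = waves(n_models=4, cores_per_model=4, cores_per_node=4, n_nodes=1)
# Grid: four 4-core worker nodes run all four models at once.
grid_waves = waves(n_models=4, cores_per_model=4, cores_per_node=4, n_nodes=4)
print(head_node_waves, grid_waves)
```

Under these assumptions the head node needs four rounds, one model after another, while the grid finishes in a single round.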

Further Learning

To learn more about applying these grid computing concepts on Metworx, please see the following: